Vozzy

The hackathon project

Before my internship at Datavant in summer 2020, my fellow interns and I were invited to participate in the company's Pandemic Response Hackathon.

We started work on the project during a time of quarantine. Most of us rarely left the house, and when we did, it was to pick up groceries or other essentials. When we were thinking about a direction for our project, we started to think about the dual forces of social isolation and falling business patronage. How would people find meaningful forms of community when conventional gathering spaces (pubs, bars, restaurants, diners, etc.) were closed? What would happen to bookshops, museums, cafes, record stores, and other small independent businesses that rely primarily on physical sales?

We wanted to address these challenges by building an authentic digital space for people to spend time together, much as they do in the physical world, while providing a way for businesses to keep promoting themselves through virtual means. We would provide a virtual platform for businesses and individuals to welcome others into their own spaces and events, such as museums, coffee shops, and parties, and make them feel like they're having genuine interactions. These large group gatherings and events would be shown on a real-time map, so users have the option to choose where they want to be and when they want to be there.

We noticed that many businesses were turning to virtual platforms, such as Zoom, to host large gatherings and meetings. However, these platforms don't let you drift naturally from conversation to conversation the way you would at an in-person gathering, where you can tune in to one conversation among many (the cocktail party effect). Our app would provide a means for users to engage with others and their surroundings in a more organic way.

Amid the caffeinated evenings, late-night Zoom pair-programming sessions, and take-out pizza that accompany a virtual hackathon, we built our prototype. Along the way, we encountered a few interesting challenges that we hadn't tackled before. First, how would we mimic the experience of sound in the real world?

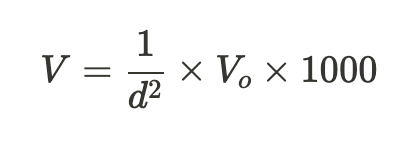

The sound-distance formula came in handy here as a way to dynamically scale the loudness of each source audio stream based on the user's position. In the formula, V_0 is the source audio volume and 1000 is an arbitrary multiplication factor.

We used Agora.io SDKs for real-time audio streaming and adjusted the loudness of each stream in real time based on the distance between individuals in the room. This mimics the natural way we perceive sound in the real world.
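The exact formula isn't reproduced in the text here, so the following is only a minimal sketch assuming an inverse-square falloff. V_0 becomes `v0`, and the arbitrary factor 1000 becomes `k`. The function names are hypothetical. In the app, each resulting value would be applied to the matching remote stream through the Agora SDK; this sketch stops short of any SDK call.

```typescript
// Sketch: distance-based volume scaling for remote audio streams.
// ASSUMPTION: inverse-square falloff; not necessarily the formula Vozzy used.

interface Point {
  x: number;
  y: number;
}

// Euclidean distance between two avatars on the 2D floor plan.
function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// v0: the source stream's volume; k: arbitrary multiplication factor (1000 in the post).
// Clamped to v0 so a nearby speaker is never louder than its source.
function scaledVolume(v0: number, d: number, k = 1000): number {
  if (d <= 0) return v0;
  return Math.min(v0, (v0 * k) / (d * d));
}

// Hypothetical helper: recompute every remote stream's volume after "me" moves.
function volumesFor(
  me: Point,
  others: Record<string, Point>,
  v0 = 100,
): Record<string, number> {
  const result: Record<string, number> = {};
  for (const id of Object.keys(others)) {
    result[id] = scaledVolume(v0, distance(me, others[id]));
  }
  return result;
}
```

With k = 1000, a listener hears the full source volume within about 31.6 units (the square root of 1000) and a quarter of it at twice that distance.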

We wanted moving around the space to feel as fluid as possible. Each user has an avatar when they enter the space. Users can drag the avatar with their mouse to move around the space. We used Firebase to store the location of each person in the room and update it in real time.
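Dragging fires many mouse events per second, so it can help to rate-limit how often a position is written to the database. Below is a minimal throttle sketch. `publish` is a hypothetical callback standing in for the actual Firebase write (for example, a Realtime Database `set()` on the user's location). The interval is an assumed value, not one from the original project.

```typescript
// Sketch: rate-limit position writes while an avatar is being dragged.
// ASSUMPTIONS: `publish` stands in for the real database write; the interval is illustrative.

interface Point {
  x: number;
  y: number;
}

function throttlePositions(
  publish: (p: Point) => void,
  intervalMs: number,
  now: () => number = () => Date.now(),
) {
  let lastSent = -Infinity;
  let pending: Point | null = null;

  return {
    // Call on every drag event; forwards at most one position per interval.
    update(p: Point): void {
      const t = now();
      if (t - lastSent >= intervalMs) {
        lastSent = t;
        pending = null;
        publish(p);
      } else {
        pending = p; // keep only the newest position; intermediate ones are dropped
      }
    },
    // Call on drag end (mouseup) so the final resting position is always written.
    flush(): void {
      if (pending !== null) {
        lastSent = now();
        publish(pending);
        pending = null;
      }
    },
  };
}
```

Other clients still see near-real-time movement, just sampled at the chosen interval, and the `flush()` on mouseup guarantees everyone agrees on where the avatar finally stopped.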

Finally, we used Mapbox to display all the open spaces users can join on a world map.

Here's the video we submitted to the hackathon competition:

Taking a fresh look

After the hackathon finished, we returned to our daily routines. But I couldn't get the potential of what we'd built out of my mind. I wanted to see it through to a usable product, beyond just the prototype stage.

In the spring of 2021, I was taking a sound design for theatre course. One of our assignments was to design a soundscape which would evoke a sense of physical presence in the listener. I started thinking more about space and sound, and how this might be prototyped in digital form.

I built a simple prototype with Mousetrap.js for capturing the user's keyboard input and Howler.js for managing audio. One cool feature of Howler.js is its support for spatial audio. This means that not only is the volume adjusted dynamically as the user moves, but the user's orientation relative to the object also affects how they perceive the audio. This is accomplished by recomputing the stereo pan and volume of the audio track based on the following variables:

- orientation of the audio source (degrees)

- orientation of the listener (degrees)

- position of the audio source relative to the global listener (Cartesian x, y, z)
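Howler.js's spatial plugin passes positions and orientations to the Web Audio API's `PannerNode`, which does the actual mixing. So the following is only a simplified 2D illustration of how these variables can combine; it is not Howler's implementation. Several details are assumptions:

- the angle convention (degrees, 0° facing +y, increasing clockwise)
- the function names
- the linear `backGain` model for a directional source

```typescript
// Simplified 2D model of orientation-aware audio (not Howler's internals).
// ASSUMED convention: angles in degrees, 0° faces +y, increasing clockwise.

interface Vec2 {
  x: number;
  y: number;
}

const toRad = (deg: number): number => (deg * Math.PI) / 180;

// Unit vector for a heading in the convention above.
function heading(deg: number): Vec2 {
  const a = toRad(deg);
  return { x: Math.sin(a), y: Math.cos(a) };
}

// Stereo pan in [-1, 1] (-1 = left ear, +1 = right ear): the component of the
// direction to the source along the listener's right-hand axis.
function stereoPan(listener: Vec2, listenerDeg: number, source: Vec2): number {
  const dx = source.x - listener.x;
  const dy = source.y - listener.y;
  const dist = Math.sqrt(dx * dx + dy * dy);
  if (dist === 0) return 0;
  const a = toRad(listenerDeg);
  // Right-hand axis = facing direction rotated 90° clockwise = (cos a, -sin a).
  return (dx * Math.cos(a) - dy * Math.sin(a)) / dist;
}

// Directional source: full gain when it faces the listener, `backGain` when it
// faces directly away, interpolated linearly in the cosine of the angle between.
function sourceFacingGain(source: Vec2, sourceDeg: number, listener: Vec2, backGain = 0.3): number {
  const dx = listener.x - source.x;
  const dy = listener.y - source.y;
  const dist = Math.sqrt(dx * dx + dy * dy);
  if (dist === 0) return 1;
  const h = heading(sourceDeg);
  const cos = (dx * h.x + dy * h.y) / dist; // 1 = facing the listener, -1 = facing away
  return backGain + ((1 - backGain) * (cos + 1)) / 2;
}
```

Turning around swaps the ears: a source on your right (pan +1) ends up on your left (pan -1) after a 180° turn, which is the effect that makes orientation matter.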

Below is a prototype with a single speaker. I wasn't able to capture sound in this screen recording, but as you move closer to the speaker, the sound gets louder.

Moving around the space with the arrow keys felt more natural than dragging my avatar with a mouse. After all, human movement is incremental: you can walk or run, but you can never teleport.

Around this time, I started thinking about how I would design the next iteration of Vozzy. In this iteration, there would be no free zooming or panning; you would have to explore the space by moving through it. Furthermore, everything would be an object, including people, speakers, decorative objects, and walls. The "object graph" is the system by which objects are managed, rendered, and removed.

Objects would support collisions. Whenever I move left, right, up, or down, I first create a "shadow" of myself at the destination and check whether it intersects an object. On a collision, we still run 20% of the movement animation to get a "bump" effect. If there's no intersection, I can proceed.
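Here's a minimal sketch of that collision check. It assumes axis-aligned rectangular bounding boxes and a fixed 10-pixel step per arrow-key press; both are assumptions, as are the names `planMove`, `intersects`, and `offset`. Instead of animating, it returns the positions an animation would pass through. That is the full step when the shadow is clear, or 20% of the step and back again when the shadow hits something.

```typescript
// Sketch: arrow-key movement with a "shadow" collision check and a bump.
// ASSUMPTIONS: axis-aligned boxes, screen coordinates (y grows downward),
// and a 10px step per key press.

interface Box {
  x: number;
  y: number;
  width: number;
  height: number;
}

type Direction = "left" | "right" | "up" | "down";

const STEP = 10;
const BUMP_FRACTION = 0.2; // "we still run 20% of the animation"

const DELTAS: Record<Direction, [number, number]> = {
  left: [-STEP, 0],
  right: [STEP, 0],
  up: [0, -STEP],
  down: [0, STEP],
};

// Strict overlap test: boxes that merely touch edges do not collide.
function intersects(a: Box, b: Box): boolean {
  return a.x < b.x + b.width && b.x < a.x + a.width && a.y < b.y + b.height && b.y < a.y + a.height;
}

function offset(box: Box, dx: number, dy: number): Box {
  return { ...box, x: box.x + dx, y: box.y + dy };
}

// Returns the positions an animation should pass through for one key press.
function planMove(me: Box, dir: Direction, obstacles: Box[]): Box[] {
  const [dx, dy] = DELTAS[dir];
  const shadow = offset(me, dx, dy); // where I would be after a full step
  if (!obstacles.some((o) => intersects(shadow, o))) {
    return [shadow]; // clear: take the full step
  }
  // Blocked: nudge 20% of the way toward the obstacle, then return (the "bump").
  return [offset(me, dx * BUMP_FRACTION, dy * BUMP_FRACTION), me];
}
```

Each arrow key could be wired to `planMove` with a Mousetrap.js binding such as `Mousetrap.bind('left', ...)`, animating through whatever frames come back.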

The camera follows me only when I leave the viewport, at which point it pans over accordingly. This lets the viewer develop a sense of where they are within the "room", similar to the dynamics of a game.
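A sketch of this follow-only-when-leaving behaviour is below. It assumes the camera pans just far enough to bring my avatar back into view. The post doesn't say how far it pans, and recentring on the avatar would be an equally valid choice. `followCamera` is a hypothetical name.

```typescript
// Sketch: a camera that only moves once the avatar leaves the viewport.
// ASSUMPTION: it pans just far enough to bring the avatar back into view.

interface Box {
  x: number;
  y: number;
  width: number;
  height: number;
}

function followCamera(camera: Box, me: Box): Box {
  let x = camera.x;
  let y = camera.y;
  // Horizontal: pan only if my box crosses the left or right edge.
  if (me.x < x) x = me.x;
  else if (me.x + me.width > x + camera.width) x = me.x + me.width - camera.width;
  // Vertical: same rule for the top and bottom edges.
  if (me.y < y) y = me.y;
  else if (me.y + me.height > y + camera.height) y = me.y + me.height - camera.height;
  return { ...camera, x, y };
}
```

Recentring on every step would instead pin the avatar to the middle of the screen. That hides the on-screen movement cues this approach is meant to preserve.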

Based on the absolute position of an object, the camera decides whether it should bother to do anything with it. Generally, the Object Manager will instantiate an object when it is inside the camera's scene or within 20% of it in any direction. Whenever the person moves, the Object Manager re-assesses the scene and checks whether any objects should be detached. The advantage here is that only the relevant objects are ever loaded, so only they have event listeners attached, making for a speedy user experience.
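The attach/detach pass could look something like this sketch. It assumes that "within 20%" means 20% of the viewport's width and height beyond each edge, and that objects are axis-aligned boxes keyed by ID. `reconcile` and its return shape are hypothetical. A real Object Manager would presumably also call each object's lifecycle methods on the IDs it returns.

```typescript
// Sketch: decide which objects to attach or detach after the camera moves.
// ASSUMPTIONS: objects are axis-aligned boxes keyed by ID, and "within 20%"
// means 20% of the viewport's width/height beyond each edge.

interface Box {
  x: number;
  y: number;
  width: number;
  height: number;
}

const MARGIN = 0.2;

// Grow a viewport by `fraction` of its size on every side.
function expand(view: Box, fraction: number): Box {
  const mx = view.width * fraction;
  const my = view.height * fraction;
  return { x: view.x - mx, y: view.y - my, width: view.width + 2 * mx, height: view.height + 2 * my };
}

function intersects(a: Box, b: Box): boolean {
  return a.x < b.x + b.width && b.x < a.x + a.width && a.y < b.y + b.height && b.y < a.y + a.height;
}

// Hypothetical Object Manager pass: compare what should be loaded with what is.
function reconcile(
  camera: Box,
  objects: Record<string, Box>,
  attached: string[],
): { attach: string[]; detach: string[] } {
  const zone = expand(camera, MARGIN);
  const inRange = Object.keys(objects).filter((id) => intersects(zone, objects[id]));
  return {
    // newly in range: load, render, and attach listeners
    attach: inRange.filter((id) => attached.indexOf(id) === -1),
    // out of range (or deleted): remove listeners and offload
    detach: attached.filter((id) => inRange.indexOf(id) === -1),
  };
}
```

The margin means an object is usually already loaded by the time it scrolls into view, so walking toward it doesn't cause a visible pop-in.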

Let's break down the components of the above diagram:

- os: This is the "operating system" of Vozzy. It handles:
  - UI sounds
  - the command bar
  - keyboard handling
  - toasts and notifications
  - alerts and confirm popups
  - right-click menus
  - audio stuff, including mute, unmute, managing WebRTC streams, etc.

- scene: Manages the interactions of objects in Vozzy. It handles:
  - Calculating spatial things, such as:
    - Calculating the overlapping area between two rectangular objects given their coordinates
    - Getting the absolute x and y of an object based on the relative viewport of the scene
    - Calculating the distance between any two bounding boxes
    - Creating a bounding box given an x, y, width, and height
    - Getting a new bounding box from a given bounding box and an x and y offset
  - Panning the scene based on my location (`os.object.get('me')`)
  - Garbage collection for objects outside of the scene (offloading objects that are no longer in the scene or nearby)
  - Handling object mutations (i.e., adding, updating, and deleting)

- object: This is the base class on which all Vozzy objects (me, you, a chair, a desk, a speaker, etc.) are built. Its interface includes:
  - `load()`: Load the object's data from the database into memory
  - `render()`: Create the object element on the canvas
  - `attachInteractivity()`: Attach event listeners to the object for dragging, clicking, etc.
  - `bump()`: Called when the object collides with another (hard) object
  - `contextmenu()`: Called when the user right-clicks the object
  - `beforeUnload()`: Any rendering/confirmation before the object is removed from the scene
  - `edit()`: Interfaces with the OS to provide an editing UI for the object
  - `paint()`: Paint the object
  - `move()`: Move the object in the scene
  - `sync()`: Sync the object (uses the `messenger` interface)
  - `getDistanceFromMe()`: Easily find the distance between a given object and the user
  - `distanceFromMeChanged()`: Triggered whenever the distance between the object and me changes, after some threshold and until another threshold (distance or event)

- messenger: Used for sending multi-purpose messages across a specified channel ID. It exposes a subscribe and send interface, and manages subscriptions internally.
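Only the messenger's shape is described here, so this is an in-memory sketch under a few assumptions. `subscribe(channelId, handler)` is assumed to return an unsubscribe function, and `send(channelId, message)` to deliver to every current subscriber on that channel. The class and its signatures are hypothetical. In Vozzy, messages would travel over a real-time backend to other clients rather than through a local object.

```typescript
// Sketch: a channel-based messenger with subscribe/send and internal bookkeeping.
// ASSUMPTION: in-memory only; a real version would relay messages between clients.

type Handler<T> = (message: T) => void;

class Messenger<T = unknown> {
  // channel ID -> handlers currently subscribed to it
  private subscriptions: Record<string, Handler<T>[]> = {};

  subscribe(channelId: string, handler: Handler<T>): () => void {
    const list: Handler<T>[] = this.subscriptions[channelId] || [];
    list.push(handler);
    this.subscriptions[channelId] = list;
    // Callers get an unsubscribe function, so they never touch the registry directly.
    return () => {
      const current: Handler<T>[] = this.subscriptions[channelId] || [];
      this.subscriptions[channelId] = current.filter((h) => h !== handler);
    };
  }

  send(channelId: string, message: T): void {
    const registered: Handler<T>[] = this.subscriptions[channelId] || [];
    // Snapshot the list so handlers added during delivery only receive later messages.
    for (const handler of registered.slice()) handler(message);
  }
}
```

An object's `sync()` could then be a thin wrapper that sends its state on a channel named after its ID, with other clients subscribed to that channel.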

The underlying philosophy here is that Vozzy is quite opinionated about the mechanics of object interaction and management but is uninterested in the shape, design, and interactivity of an object itself. That's for the object's creator to decide!
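One way to express that split in code is an abstract base class. The framework implements the mechanics (movement and distance tracking) and leaves appearance and reactions to subclasses. In this sketch only the method names `move`, `bump`, `paint`, `getDistanceFromMe`, and `distanceFromMeChanged` come from the interface listed above. The constructor shape, `boxDistance`, the 5-unit notification threshold, and the `Radio` subclass are hypothetical.

```typescript
// Sketch of an object base class where the framework owns the mechanics and
// subclasses own appearance and reactions. All names below are hypothetical
// except the method names taken from the interface listed in the post.

interface Box {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Shortest gap between two axis-aligned boxes (0 when they touch or overlap).
function boxDistance(a: Box, b: Box): number {
  const dx = Math.max(0, b.x - (a.x + a.width), a.x - (b.x + b.width));
  const dy = Math.max(0, b.y - (a.y + a.height), a.y - (b.y + b.height));
  return Math.sqrt(dx * dx + dy * dy);
}

abstract class VozzyObject {
  id: string;
  box: Box;
  protected me: () => Box; // returns the current user's bounding box
  private lastDistance = Infinity;

  constructor(id: string, box: Box, me: () => Box) {
    this.id = id;
    this.box = box;
    this.me = me;
  }

  // Decided by the object's creator.
  // paint() returns a description instead of drawing, to keep the sketch self-contained.
  abstract paint(): string;
  bump(_other?: VozzyObject): void {}
  distanceFromMeChanged(_distance: number): void {}

  // Decided by the framework.
  getDistanceFromMe(): number {
    return boxDistance(this.box, this.me());
  }

  move(dx: number, dy: number): void {
    this.box = { ...this.box, x: this.box.x + dx, y: this.box.y + dy };
    this.notifyIfDistanceChanged();
  }

  // ASSUMED threshold: notify only when the distance has changed by at least 5 units.
  protected notifyIfDistanceChanged(threshold = 5): void {
    const d = this.getDistanceFromMe();
    if (Math.abs(d - this.lastDistance) >= threshold) {
      this.lastDistance = d;
      this.distanceFromMeChanged(d);
    }
  }
}

// Example object: a radio that toggles mute when something bumps into it.
class Radio extends VozzyObject {
  muted = false;

  paint(): string {
    return this.muted ? "radio (muted)" : "radio";
  }

  bump(): void {
    this.muted = !this.muted;
  }
}
```

The `Radio` subclass never touches positions or distances; it only decides how it looks and what a bump means to it.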

The goal was to mirror how responsibilities for objects are divided in the real world. After all, a radio manufacturer can't alter gravity, but they can control their radio's weight and appearance.

So, let's put it all together. Here's a screen recording of me adding some chairs in a Vozzy space:

And adding a radio to play music for everyone in the space:

You interact with objects by bumping against them. Here's me muting the radio by bumping into it: